%load_ext autoreload

%autoreload 2

Introduction

In this blog post I implement kernel logistic regression. Kernel logistic regression uses a kernel function to compare each point with the training points and learns one weight per training point. It is often used when the classes cannot be separated by a straight line, so that regular logistic regression is unsuccessful. Throughout this blog post, I perform several experiments that show how my kernel logistic regression model performs in a standard setting and while changing different aspects such as gamma and the noise in the data. One note about my code: every experiment I run with my model produces runtime warnings that I have been unsuccessful in getting rid of.

Code Explanation

The goal of my code is to find the value of v that minimizes the empirical risk. In the fit method of my code, I first pad X and then compute a kernel matrix using the kernel function passed to the init method. The kernel matrix encodes the similarity between every pair of data points in the training set. Next, after initializing a value for v, the method uses the scipy.optimize.minimize() function to minimize the empirical risk with respect to the weight vector v.
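The fit procedure described above can be sketched roughly as follows. This is a minimal illustration, not the contents of KernelLogisticRegression.py: the function names (pad, risk_and_gradient, fit_klr, predict_klr), the zero initialization of v, the analytic gradient handed to minimize, and the iteration cap are all assumptions made for the sketch.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import expit  # numerically stable sigmoid
from sklearn.datasets import make_moons
from sklearn.metrics.pairwise import rbf_kernel


def pad(X):
    """Append a constant column of ones to X."""
    return np.append(X, np.ones((X.shape[0], 1)), axis=1)


def risk_and_gradient(v, K, y):
    """Mean logistic loss of the scores K @ v, and its gradient in v."""
    y_hat = K @ v
    # log(1 + exp(y_hat)) - y * y_hat is the logistic loss for y in {0, 1}
    loss = np.mean(np.logaddexp(0, y_hat) - y * y_hat)
    grad = K.T @ (expit(y_hat) - y) / len(y)
    return loss, grad


def fit_klr(X, y, gamma=0.1):
    """Return the padded training data and the fitted weight vector v."""
    X_train = pad(X)
    K = rbf_kernel(X_train, X_train, gamma=gamma)  # n x n similarity matrix
    v0 = np.zeros(X_train.shape[0])  # assumed start: one zero weight per point
    result = minimize(risk_and_gradient, x0=v0, args=(K, y), jac=True,
                      method="BFGS", options={"maxiter": 2000})
    return X_train, result.x


def predict_klr(X_new, X_train, v, gamma=0.1):
    """Predict 1 where the kernel score is positive, otherwise 0."""
    K_new = rbf_kernel(pad(X_new), X_train, gamma=gamma)
    return (K_new @ v > 0).astype(int)


X, y = make_moons(200, shuffle=True, noise=0.2, random_state=0)
X_train, v = fit_klr(X, y)
accuracy = np.mean(predict_klr(X, X_train, v) == y)
print(f"Training accuracy: {accuracy:.3f}")
```

Supplying the gradient is only a speed-up; leaving out jac=True and returning just the loss lets minimize estimate the gradient numerically, at the cost of many more loss evaluations.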

In my model, the empirical risk is the mean of the logistic loss, which measures the difference between the model's predictions and the actual labels of the training data. It is calculated in three steps. First, the method computes the predictions using the kernel matrix and the weight vector v. Next, it plugs the predictions into the logistic loss function to compare the predicted values with the true values of y. Finally, it takes the mean of the resulting losses.
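The runtime warnings in this post point at the logistic loss line, which takes np.log of a sigmoid that can round to exactly 0 or 1 for extreme scores. One possible way around this, offered as a sketch and not as what the source file does, is to use the algebraically equivalent form log(1 + exp(y_hat)) - y * y_hat, computed with np.logaddexp. The function names and the sample scores below are made up for the comparison.

```python
import numpy as np


def naive_logistic_loss(y_hat, y):
    """The formula from the warning messages, evaluated directly."""
    sig = 1 / (1 + np.exp(-y_hat))
    return -y * np.log(sig) - (1 - y) * np.log(1 - sig)


def stable_logistic_loss(y_hat, y):
    """The same loss rewritten as log(1 + exp(y_hat)) - y * y_hat."""
    return np.logaddexp(0, y_hat) - y * y_hat


# Hypothetical scores, including two extreme ones, with labels in {0, 1}.
y_hat = np.array([-800.0, -2.0, 0.5, 800.0])
y = np.array([0.0, 1.0, 0.0, 1.0])

with np.errstate(all="ignore"):  # silence the warnings for this comparison
    naive = naive_logistic_loss(y_hat, y)
stable = stable_logistic_loss(y_hat, y)

print("naive: ", naive)
print("stable:", stable)
```

For the moderate scores the two versions agree, while for the extreme scores the naive version multiplies 0 by an infinite logarithm and returns nan, and the rewritten version stays finite.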

Initial experiments

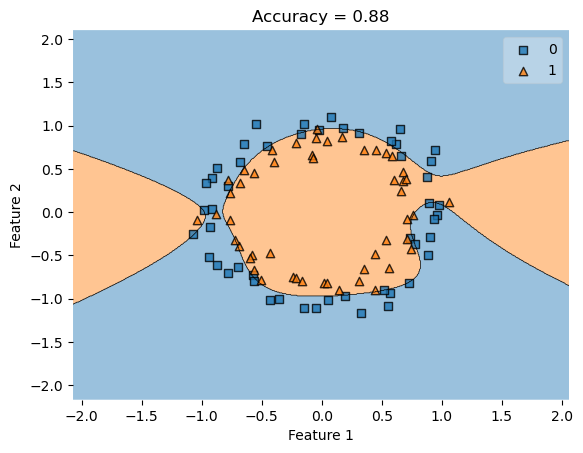

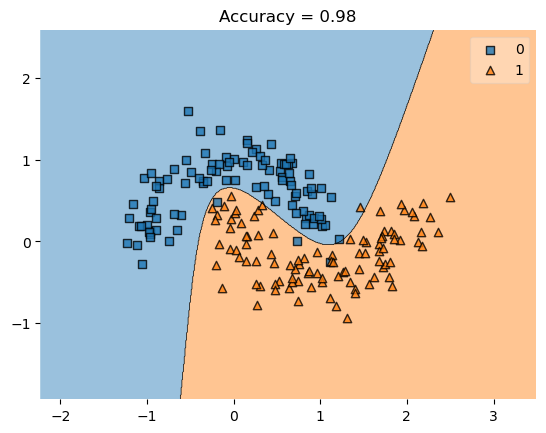

Below, I run two experiments with the code provided in the blog post assignment. If my kernel logistic regression model is running properly, it should produce an accuracy score at or above 90 percent. In both experiments, I create a set of random data to run my kernel logistic regression model on. With the first set of random data, the model produces an accuracy score of 98%, and with the second set, it produces an accuracy score of 97.5%. This is a strong indication that my model is performing as it is supposed to and is ready for further experiments in which aspects of the inputs are altered.

from KernelLogisticRegression import KernelLogisticRegression # your source code

from sklearn.metrics.pairwise import rbf_kernel

from sklearn.datasets import make_moons, make_circles

from mlxtend.plotting import plot_decision_regions

from matplotlib import pyplot as plt

X, y = make_moons(200, shuffle = True, noise = 0.2)

KLR = KernelLogisticRegression(rbf_kernel, gamma = .1)

KLR.fit(X, y)

plot_decision_regions(X, y, clf = KLR)

score = plt.gca().set_title(f"Accuracy = {KLR.score(X, y)}")

print(KLR.score(X, y))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: divide by zero encountered in log

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: invalid value encountered in multiply

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:33: RuntimeWarning: overflow encountered in exp

return 1 / (1 + np.exp(-z))

0.98

from KernelLogisticRegression import KernelLogisticRegression # your source code

from sklearn.metrics.pairwise import rbf_kernel

from sklearn.datasets import make_moons, make_circles

X, y = make_moons(200, shuffle = True, noise = 0.2)

KLR = KernelLogisticRegression(rbf_kernel, gamma = .1)

KLR.fit(X, y)

plot_decision_regions(X, y, clf = KLR)

score = plt.gca().set_title(f"Accuracy = {KLR.score(X, y)}")

print(KLR.score(X, y))

0.975

Choosing Gamma

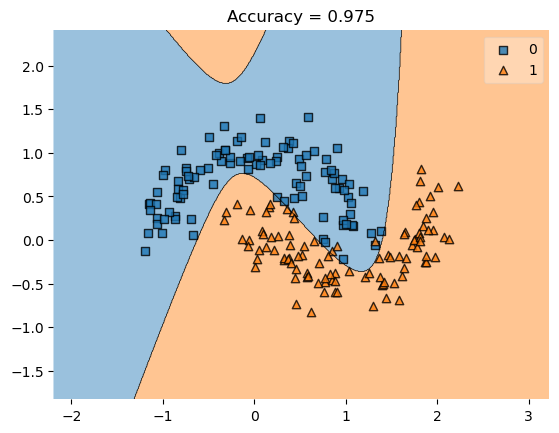

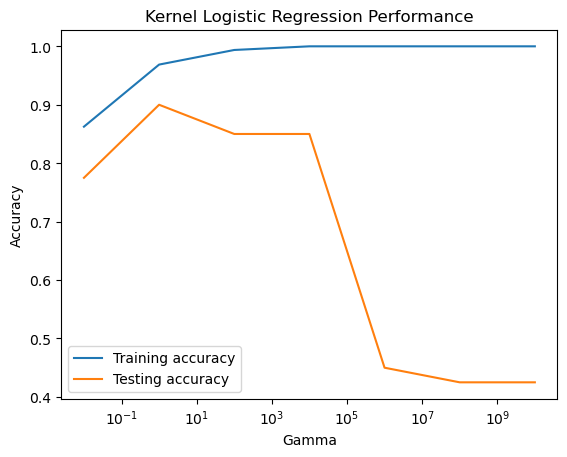

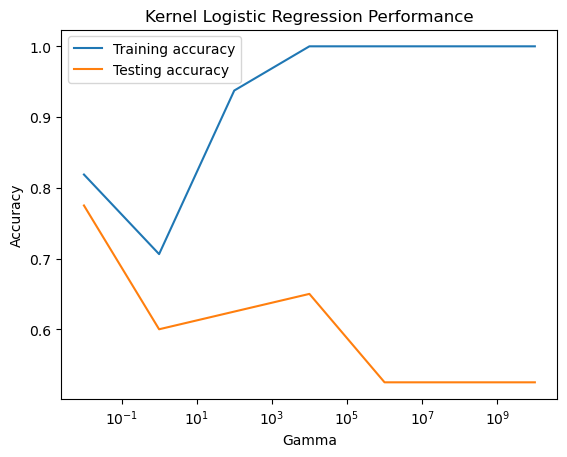

Next, I experiment with changing the value of gamma. A very large gamma allows for perfect training accuracy because the model overfits the training data: it carves out a tiny region around each individual training point. Regions that specific generalize poorly, so the testing accuracy is low, and testing accuracy is ultimately more important than a perfect training score. The first example below uses an extremely high gamma value that results in a perfect training score but would result in a poor validation score.

Next, I run an experiment that varies gamma and plots the training score against the validation score as gamma increases. The experiment covers the range from 10^-2 to 10^10. The graph shows that the validation score increases as gamma increases up to 10^1. However, once gamma passes 10, the validation score rapidly decreases while the training score increases to 100%. This shows that when using the kernel logistic regression model, it is better to choose a smaller value of gamma even if it means a lower training score, because it results in a higher validation score, which is what we want.
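One way to see why a huge gamma overfits is to look at the kernel matrix itself. The sketch below, which assumes a fixed random_state and three illustrative gamma values that are not taken from the experiments in this post, measures how similar different training points look to one another under the RBF kernel.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.metrics.pairwise import rbf_kernel

X, y = make_moons(200, shuffle=True, noise=0.2, random_state=0)

# Mask that selects every entry of the kernel matrix except the diagonal.
off_diagonal = ~np.eye(len(X), dtype=bool)

mean_similarity = {}
for gamma in [0.1, 10, 1_000_000]:
    K = rbf_kernel(X, X, gamma=gamma)  # K[i, j] = exp(-gamma * ||x_i - x_j||^2)
    mean_similarity[gamma] = K[off_diagonal].mean()
    print(f"gamma = {gamma:>9}: mean similarity between different points "
          f"= {mean_similarity[gamma]:.6f}")
```

With a small gamma most pairs of points count as similar, so the decision boundary is smooth. With a very large gamma the similarity between different points is essentially zero, so each training point influences only its immediate surroundings, which is consistent with a perfect training score and a poor validation score.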

from sklearn.datasets import make_moons, make_circles

from matplotlib import pyplot as plt

from mlxtend.plotting import plot_decision_regions

KLR = KernelLogisticRegression(rbf_kernel, gamma = 1000000)

KLR.fit(X, y)

print(KLR.score(X, y))

plot_decision_regions(X, y, clf = KLR)

t = title = plt.gca().set(title = f"Accuracy = {KLR.score(X, y)}",
                          xlabel = "Feature 1",
                          ylabel = "Feature 2")

1.0

from sklearn.model_selection import train_test_split

from sklearn.datasets import make_moons, make_circles

from sklearn.metrics.pairwise import rbf_kernel

import matplotlib.pyplot as plt

import numpy as np

from KernelLogisticRegression import KernelLogisticRegression # your source code

X, y = make_moons(200, shuffle = True, noise = 0.2)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)

#range from 10^-2 to 10^10

gamma_range = np.logspace(-2, 10, num=7)

train_arr = np.zeros_like(gamma_range)

test_arr = np.zeros_like(gamma_range)

for i, gamma in enumerate(gamma_range):
    model = KernelLogisticRegression(rbf_kernel, gamma=gamma)
    model.fit(X_train, y_train)
    train_arr[i] = model.score(X_train, y_train)
    test_arr[i] = model.score(X_test, y_test)

plt.semilogx(gamma_range, train_arr, label='Training accuracy')

plt.semilogx(gamma_range, test_arr, label='Testing accuracy')

plt.xlabel('Gamma')

plt.ylabel('Accuracy')

plt.title('Kernel Logistic Regression Performance')

plt.legend()

plt.show()

Varying Noise

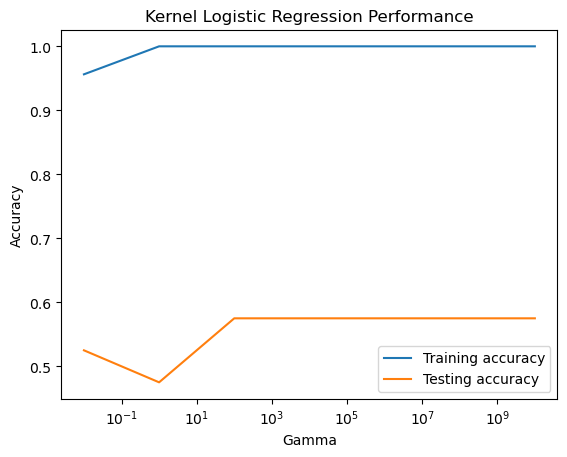

Our next experiments involve varying the noise when creating our data set. The noise controls how spread out the moon crescents are. Below I perform two experiments. The first uses a small noise value of 0.5. In this experiment, the validation score decreases from the beginning, with a slight increase at a gamma value of 10^4, before decreasing again and leveling out at about 0.5. Unlike in the experiment above, the training score takes an initial dip at 10^0 before increasing and again reaching a final training score of 100%. Next, I perform an experiment with a large noise value of 100. This experiment shows different results from both of the previous graphs. Here, the validation score takes an initial dip to 50% at a gamma value of 10^0 before going back up to 60% at a gamma value of 10^1 and staying at 60% for the rest of the experiment. The training accuracy reaches 100% at a gamma value of 10 and stays at 100% for the remainder of the experiment. From these two experiments, we see that smaller noise is better for smaller values of gamma, but greater noise is better for larger values of gamma.
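To get a feel for what these noise values mean, the sketch below compares noisy moons with noiseless ones point by point. It assumes shuffle = False and a fixed random_state so the two versions line up, and it uses 1000 points purely to make the estimate steadier; neither choice comes from the experiments in this post.

```python
import numpy as np
from sklearn.datasets import make_moons

# With shuffle=False the points come out in a fixed order, so the noisy and
# noiseless versions can be subtracted from each other directly.
X_clean, _ = make_moons(1000, shuffle=False, noise=None)

spread = {}
for noise in [0.2, 0.5, 100]:
    X_noisy, _ = make_moons(1000, shuffle=False, noise=noise, random_state=0)
    # Standard deviation of how far each coordinate was moved by the noise.
    spread[noise] = np.std(X_noisy - X_clean)
    print(f"noise = {noise:>5}: standard deviation of the displacement "
          f"= {spread[noise]:.3f}")

width = X_clean[:, 0].max() - X_clean[:, 0].min()
print(f"width of the noiseless moons: {width:.1f}")
```

The noise argument behaves like the standard deviation of the displacement added to each coordinate. Since the noiseless moons are only about 3 units wide, a noise value of 100 would be expected to wipe out the crescent shape almost entirely, which may help explain why the validation score stays close to chance in that experiment.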

from sklearn.model_selection import train_test_split

from sklearn.datasets import make_moons, make_circles

from sklearn.metrics.pairwise import rbf_kernel

import matplotlib.pyplot as plt

import numpy as np

from KernelLogisticRegression import KernelLogisticRegression # your source code

X, y = make_moons(200, shuffle = True, noise = 0.5)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)

#range from 10^-2 to 10^10

gamma_range = np.logspace(-2, 10, num=7)

train_arr = np.zeros_like(gamma_range)

test_arr = np.zeros_like(gamma_range)

for i, gamma in enumerate(gamma_range):
    model = KernelLogisticRegression(rbf_kernel, gamma=gamma)
    model.fit(X_train, y_train)
    train_arr[i] = model.score(X_train, y_train)
    test_arr[i] = model.score(X_test, y_test)

plt.semilogx(gamma_range, train_arr, label='Training accuracy')

plt.semilogx(gamma_range, test_arr, label='Testing accuracy')

plt.xlabel('Gamma')

plt.ylabel('Accuracy')

plt.title('Kernel Logistic Regression Performance')

plt.legend()

plt.show()/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: divide by zero encountered in log

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: invalid value encountered in multiply

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:33: RuntimeWarning: overflow encountered in exp

return 1 / (1 + np.exp(-z))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: divide by zero encountered in log

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: invalid value encountered in multiply

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:33: RuntimeWarning: overflow encountered in exp

return 1 / (1 + np.exp(-z))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:33: RuntimeWarning: overflow encountered in exp

return 1 / (1 + np.exp(-z))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: divide by zero encountered in log

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: invalid value encountered in multiply

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: divide by zero encountered in log

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: invalid value encountered in multiply

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:33: RuntimeWarning: overflow encountered in exp

return 1 / (1 + np.exp(-z))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: divide by zero encountered in log

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: invalid value encountered in multiply

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:33: RuntimeWarning: overflow encountered in exp

return 1 / (1 + np.exp(-z))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:33: RuntimeWarning: overflow encountered in exp

return 1 / (1 + np.exp(-z))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: divide by zero encountered in log

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: invalid value encountered in multiply

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: divide by zero encountered in log

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: invalid value encountered in multiply

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:33: RuntimeWarning: overflow encountered in exp

return 1 / (1 + np.exp(-z))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: divide by zero encountered in log

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: invalid value encountered in multiply

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:33: RuntimeWarning: overflow encountered in exp

return 1 / (1 + np.exp(-z))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:33: RuntimeWarning: overflow encountered in exp

return 1 / (1 + np.exp(-z))

/Users/ceceziegler/Desktop/CeceZiegler1.github.io/posts/KernelBlog/KernelLogisticRegression.py:36: RuntimeWarning: divide by zero encountered in log

return -y*np.log(self.sigmoid(y_hat)) - (1-y)*np.log(1-self.sigmoid(y_hat))

from sklearn.model_selection import train_test_split

from sklearn.datasets import make_moons, make_circles

from sklearn.metrics.pairwise import rbf_kernel

import matplotlib.pyplot as plt

import numpy as np

from KernelLogisticRegression import KernelLogisticRegression # your source code

X, y = make_moons(200, shuffle = True, noise = 100)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)

#range from 10^-2 to 10^10

gamma_range = np.logspace(-2, 10, num=7)

train_arr = np.zeros_like(gamma_range)

test_arr = np.zeros_like(gamma_range)

for i, gamma in enumerate(gamma_range):

    model = KernelLogisticRegression(rbf_kernel, gamma=gamma)

    model.fit(X_train, y_train)

    train_arr[i] = model.score(X_train, y_train)

    test_arr[i] = model.score(X_test, y_test)

plt.semilogx(gamma_range, train_arr, label='Training accuracy')

plt.semilogx(gamma_range, test_arr, label='Testing accuracy')

plt.xlabel('Gamma')

plt.ylabel('Accuracy')

plt.title('Kernel Logistic Regression Performance')

plt.legend()

plt.show()

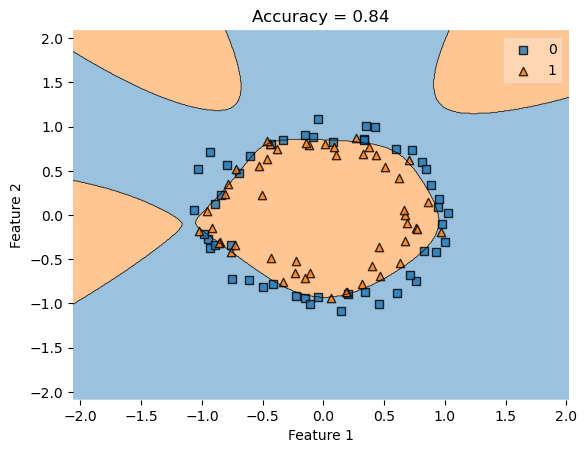
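One way to build intuition for why the largest gamma values in a sweep like this can behave so differently is to look at the kernel matrix itself. The RBF kernel is `exp(-gamma * ||x - x'||^2)`, so as gamma grows, the similarity between any two distinct points shrinks toward zero and the kernel matrix approaches the identity. The sketch below uses a small hand-written helper, `rbf_kernel_matrix` (a hypothetical name, standing in for `sklearn`'s `rbf_kernel`), and five random demo points that are not from my experiments.

```python
import numpy as np

def rbf_kernel_matrix(X, gamma):
    # Pairwise squared Euclidean distances via ||a||^2 + ||b||^2 - 2 a.b
    sq_norms = np.sum(X ** 2, axis=1)
    d2 = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    d2 = np.maximum(d2, 0.0)  # clip tiny negatives caused by rounding
    return np.exp(-gamma * d2)

# Illustrative data only: five random 2-D points.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(5, 2))
off_diagonal = ~np.eye(len(X_demo), dtype=bool)

for gamma in [1e-2, 1.0, 1e4]:
    K = rbf_kernel_matrix(X_demo, gamma)
    mean_sim = K[off_diagonal].mean()
    print(f"gamma={gamma:g}: mean similarity between distinct points = {mean_sim:.3f}")
```

When each training point is similar only to itself, the model can effectively memorize the training labels, which is one plausible reading of a gap between training and testing accuracy at high gamma.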

Concentric Circles

Below, I fit my kernel logistic regression model to data arranged in concentric circles to see how it performs. It was difficult to find values of noise and gamma for which the model learned well enough to perform well on testing data. What I found is that smaller values of both gamma and noise produced better training scores without overfitting the model.

from sklearn.datasets import make_moons, make_circles

from matplotlib import pyplot as plt

from mlxtend.plotting import plot_decision_regions

X, y = make_circles(n_samples=100, shuffle=True, noise=0.1)

KLR = KernelLogisticRegression(rbf_kernel, gamma = 0.5)

KLR.fit(X, y)

print(KLR.score(X, y))

plot_decision_regions(X, y, clf = KLR)

t = title = plt.gca().set(title = f"Accuracy = {KLR.score(X, y)}",

xlabel = "Feature 1",

ylabel = "Feature 2")

0.84

from sklearn.datasets import make_moons, make_circles

from matplotlib import pyplot as plt

from mlxtend.plotting import plot_decision_regions

X, y = make_circles(n_samples=100, shuffle=True, noise=0.1)

KLR = KernelLogisticRegression(rbf_kernel, gamma = 2)

KLR.fit(X, y)

print(KLR.score(X, y))

plot_decision_regions(X, y, clf = KLR)

t = title = plt.gca().set(title = f"Accuracy = {KLR.score(X, y)}",

xlabel = "Feature 1",

ylabel = "Feature 2")

0.88